The AI Revolution: From Defense Mechanism to Existential Threat

What Deepfake-as-a-Service (DaaS) is, how it is commonly abused, and how to mitigate it.

Deepfake-as-a-Service (DaaS) refers to the growing ecosystem of accessible, on-demand tools and platforms that allow individuals, including criminals, to create highly realistic synthetic audio, video, and images with minimal technical expertise. This "democratization of deception" has significantly lowered the barrier to entry for sophisticated fraud and manipulation.

Overview

Traditionally, creating convincing deepfakes required significant technical skill, expensive hardware, and large datasets. DaaS platforms have commodified this process, offering easy-to-use interfaces, subscription APIs, or even dark web services for a fee that can range from a few hundred to thousands of dollars, depending on quality and complexity.

These services leverage advanced AI technologies, such as generative adversarial networks (GANs) and other deep neural networks, to produce content that is often indistinguishable from authentic media to the human eye and even to some detection systems.

Common Malicious Uses

The primary goal of DaaS abuse is often financial fraud and social engineering.

Financial Fraud/CEO Fraud: Impersonating executives (known as "deepfake whaling") in phone or video calls to trick employees into making unauthorized, high-value wire transfers.

Identity Theft and Verification Bypass: Generating fake identities or spoofing biometrics (like voice or facial recognition) to bypass Know Your Customer (KYC) checks during account creation or to gain access to sensitive systems.

Disinformation Campaigns: Creating fabricated videos of public figures making inflammatory statements to manipulate public opinion, damage reputations, or influence stock prices.

Extortion and Sextortion: Creating nonconsensual explicit deepfake content for blackmail or other forms of personal harm.

Defense and Mitigation Strategies

Combating DaaS requires a multi-layered approach, combining technical solutions, robust processes, and human vigilance.

Fortify Processes: Implement mandatory, multi-person verification procedures for sensitive requests (e.g., large wire transfers). Confirm each request over a different, pre-established communication channel, such as calling the person back on a known, trusted phone number.
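
As a rough illustration of the process above, the sketch below models a wire transfer that is released only after two approvers other than the requester have signed off and a callback on a trusted number has been confirmed. The threshold, the approver count, and every name in it (TransferRequest, approve, may_release) are assumptions made for this example, not part of any real payment system.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical policy values; real thresholds depend on the organization.
APPROVAL_THRESHOLD_USD = 10_000
REQUIRED_APPROVERS = 2


@dataclass
class TransferRequest:
    requester: str
    amount_usd: float
    approvers: Set[str] = field(default_factory=set)
    # Set to True only after calling the requester back on a known, trusted number.
    callback_confirmed: bool = False


def approve(request: TransferRequest, approver: str) -> None:
    """Record an approval; requesters cannot approve their own request."""
    if approver == request.requester:
        raise ValueError("requester cannot approve their own transfer")
    request.approvers.add(approver)


def may_release(request: TransferRequest) -> bool:
    """Return True only when every control for this amount is satisfied."""
    if request.amount_usd < APPROVAL_THRESHOLD_USD:
        return True  # small transfers skip the extra controls in this sketch
    return (
        len(request.approvers) >= REQUIRED_APPROVERS
        and request.callback_confirmed
    )
```

The point of the design is that a convincing voice or video call alone can never satisfy may_release: the callback and the independent approvers sit outside the channel the attacker controls.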

Technical Controls: Employ AI-powered detection tools and liveness detection for biometric verification to identify digital artifacts or inconsistencies that indicate a deepfake. Industry standards, such as the content provenance specification from the Coalition for Content Provenance and Authenticity (C2PA), are also emerging to help verify media authenticity.

Evolve Security Awareness: Provide specific training for employees on how to spot deepfake threats and create clear incident response plans. Awareness of the threat landscape is crucial, as most people are not confident in their ability to distinguish real from cloned voices.

Autonomous Malware and Agentic AI Dominance

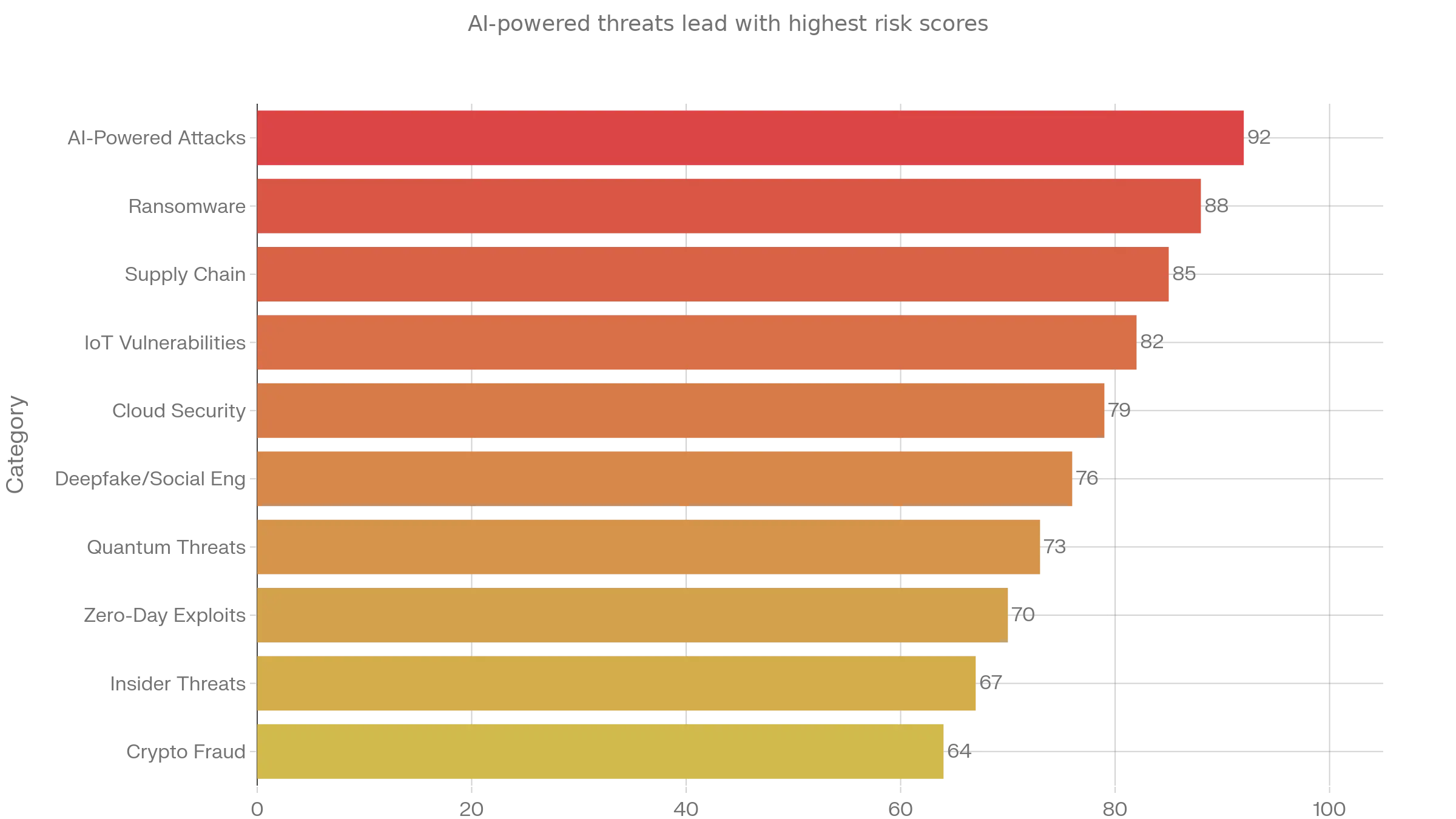

The most significant trend in Cybersecurity Predictions 2026 is the industrialization of artificial intelligence in cyberattacks. Threat actors are deploying agentic AI: self-directed systems that autonomously plan, execute, and adapt campaigns without human intervention.

Unlike traditional scripted malware, these trained AI agents can analyze network defenses in real time, modify payloads during attacks, and learn from detection responses to evolve their tactics. That said, attackers are advancing at the same pace as AI itself: they exploit the negative side of autonomous agentic AI by drawing on existing data, which I think is effectively unlimited, and this will give rise to new types of threats.

As artificial intelligence becomes deeply embedded in enterprise operations and cybercriminal arsenals alike, the Cybersecurity Predictions 2026 landscape reveals an unprecedented convergence of autonomous threats, identity-centric attacks, and accelerated digital transformation risks.

Threats are becoming more self-learning, self-executing, and faster than human response times. Instead of attacking networks directly, attackers target user identities, credentials, and access tokens, relying on techniques such as MFA fatigue* and session hijacking.

* MFA fatigue is a social engineering cyberattack where hackers repeatedly spam a user with Multi-Factor Authentication (MFA) push notifications until the frustrated victim approves one to make the alerts stop, granting the attacker access to their account.

How to protect against MFA fatigue

Security Awareness

Train users to recognize suspicious requests and never approve prompts they didn't initiate.

Technical Controls

Contextual Info: Include location, time, or activity details in prompts.

Alternative Methods: Use time-based one-time passwords (TOTP) or passkeys.

Rate Limit: Limit the number of MFA verification prompts sent to a user within a given time window.
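
Two of the controls above can be sketched with the Python standard library. The totp function follows the published TOTP algorithm (RFC 6238 with HMAC-SHA1 and the RFC 4226 truncation step). The PromptRateLimiter class is a hypothetical sliding-window limiter, and its default of 3 prompts per 300 seconds is an assumption chosen for illustration, not a recommended setting. Treat both as a minimal sketch rather than production code.

```python
import hashlib
import hmac
import struct
import time
from collections import defaultdict, deque
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    now = time.time() if at is None else at
    counter = int(now // step)  # number of time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


class PromptRateLimiter:
    """Allow at most `max_prompts` MFA prompts per user in a sliding window."""

    def __init__(self, max_prompts: int = 3, window_seconds: float = 300.0):
        self.max_prompts = max_prompts
        self.window_seconds = window_seconds
        self._sent = defaultdict(deque)  # user -> timestamps of recent prompts

    def allow(self, user: str, now: Optional[float] = None) -> bool:
        """Return True and record the prompt if the user is under the limit."""
        now = time.time() if now is None else now
        recent = self._sent[user]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_prompts:
            return False  # suppress the prompt; a real system might also alert
        recent.append(now)
        return True
```

With a limiter like this in front of the push service, an attacker who triggers dozens of login attempts can reach the user only a few times per window, which blunts the "approve it to make it stop" pressure. A TOTP code, by contrast, has to be typed in by the user, so there is no prompt to spam in the first place.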

What to do during an attack

Deny All: Decline every single notification.

Report: Immediately report the activity to your IT or security department.

Change Password: Reset your password immediately after the attack stops.

Some real-life examples and predictions

Google’s Threat Intelligence Group documented the first large-scale cyberattack executed with minimal human oversight in September 2025, where AI systems autonomously targeted global entities.

By 2026, experts predict these autonomous threats will achieve full data exfiltration 100 times faster than human attackers, fundamentally rendering traditional playbooks obsolete. IBM’s analysis indicates that organizations face a new exposure problem: businesses will know data was compromised but won’t be able to trace which AI agents moved it, where it went, or why.

Palo Alto Networks predicts that by 2026, machine identities will outnumber human employees by 82 to 1, creating unprecedented opportunities for AI-driven identity fraud where a single forged identity can trigger cascades of automated malicious actions.

Deepfake-enabled vishing (voice phishing) surged by over 1,600% in the first quarter of 2025, with attackers leveraging voice cloning to bypass authentication systems and manipulate employees.

Ransomware continues its aggressive evolution, with Cybersecurity Predictions 2026 forecasting a 40% increase in publicly named victims by year’s end, rising from 5,010 in 2024 to over 7,000 in 2026. Commvault’s research demonstrates that agentic AI ransomware can reason, plan, and act autonomously, adapting attacks in real-time and learning from defenders faster than they can respond.

Based on controlled testing and predictions by security experts, the frequency of ransomware attacks is projected to increase from one attack every 11 seconds in 2020 to one attack every 2 seconds by 2031.
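
To put the ransomware figures above on a common scale, the short calculation below converts the attack intervals into attacks per day and checks the 40% growth figure against the 2024 victim count. It only restates the numbers quoted above; the function and variable names are made up for this sketch.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def attacks_per_day(seconds_between_attacks: float) -> float:
    """Convert 'one attack every N seconds' into attacks per day."""
    return SECONDS_PER_DAY / seconds_between_attacks


rate_2020 = attacks_per_day(11)  # about 7,855 attacks per day
rate_2031 = attacks_per_day(2)   # 43,200 attacks per day
increase_factor = rate_2031 / rate_2020  # 11 / 2, i.e. about 5.5x

# A 40% increase on the 5,010 publicly named victims reported for 2024.
victims_2024 = 5010
victims_2026 = round(victims_2024 * 1.40)  # 7,014, consistent with "over 7,000"
```

Seen this way, the projected move from 11 seconds to 2 seconds is roughly a 5.5-fold increase in daily attack volume.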